What is Excel VBA Web Scraping?

Excel VBA (Visual Basic for Applications) web scraping refers to the process of extracting data from websites using VBA code in Microsoft Excel.

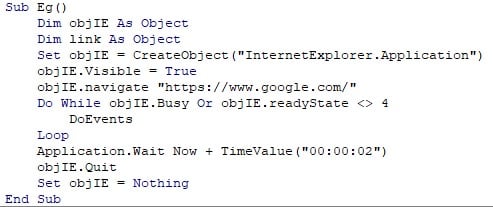

VBA Web scraping involves fetching and extracting data from web pages and VBA web scraping without a browser. By writing VBA code, you can instruct Excel to navigate to a website, retrieve specific information from the HTML source code, and then store that data in your Excel workbook. See a simple example of performing Web Scraping in VBA with the example below.

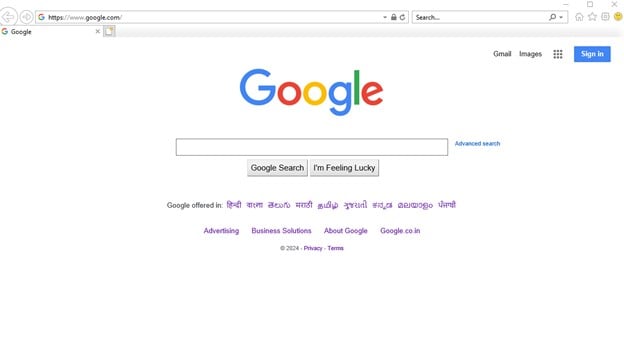

It opens a session of Internet Explorer by opening the link “google.com.” It waits for 2 seconds due to the Application.Wait function and then closes the session. The object used to open Internet Explorer is set to nothing to prevent any cache.

As seen above, the Google tab opens, loads in, and then closes after 2 seconds.

Table of Contents

Key Takeaways

- VBA coding is used for VBA web scraping in Excel.

- Internet Explorer or Selenium with EdgeDriver are commonly used for web automation.

- VBA interacts with the Document Object Model (DOM) to extract and manipulate web page elements. XPath or element IDs are used to locate and interact with HTML elements.

- We implement waiting mechanisms to ensure elements load in VBA Web Scraping before interacting.

How to perform Web Scraping in Microsoft Edge, Google Chrome and Mozilla Firefox?

Internet Explorer is generally used for Web Scraping in Excel VBA. There is no native support for other browsers, such as Google Chrome and Microsoft Edge, without third-party applications. To be able to perform VBA web scraping Microsoft Edge, you need to install Selenium Basic and select the support you need.

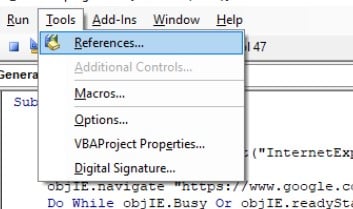

After installing, go to the VBA Editor and go to Tools > References in the VBA Toolbar.

Select “Selenium Type Library” to be able to do Web Scraping in Excel VBA.

How to Scrape Website Data using VBA?

Learn how to scrape data from the web in VBA by following the steps below.

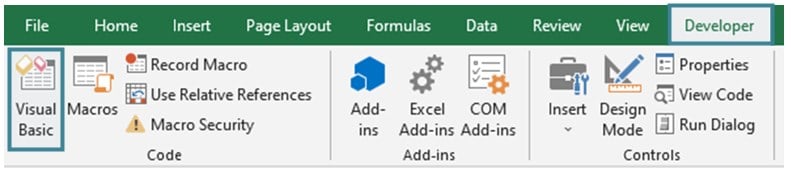

Step 1: Once you open the Excel Workbook, in the title bar, choose the “Developer” icon and click on it.

In Developer, select “Visual Basic.” It opens the VBA Editor. Initially, it will be empty.

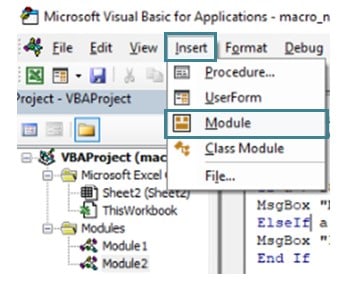

In the Editor, select “Insert” > “New Module” present in the toolbar. Now, you are ready to start coding in Excel VBA.

Step 2: Name the subroutine to check the presence of a word in any website and say whether it exists.

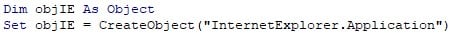

Step 3: Create a VBA Object and use it to open the Internet Explorer.

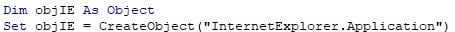

Step 4: Store the URL you want to search the word in a string variable.

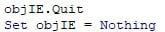

For this example, the VBA tutorial’s search result in Google is copied.

Copy the URL and paste it as a string in the variable.

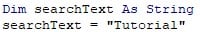

Step 5: Define the text you want to search on the webpage in a string variable.

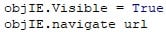

Step 6: Set the visibility of Internet Explorer as true. Then, make it go to the URL mentioned earlier.

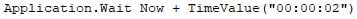

Step 7: Wait for 2 seconds for the page to load properly using the VBA Wait function.

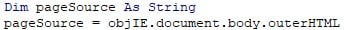

Step 8: Store the HTML of the webpage in a string variable. It will be used to search for the text.

Step 9: Check for the presence of the word on the webpage with an If condition.

The InStr function searches for the occurrence of the text to be searched within the text stored in the string, starting from the first character (position 1). The function returns the position of the first occurrence of searchText in pageSource. If not found, it returns 0.

vbTextCompare: It is a constant in the InStr function, which specifies a text comparison mode for InStr. In this case, it performs a case-insensitive search.

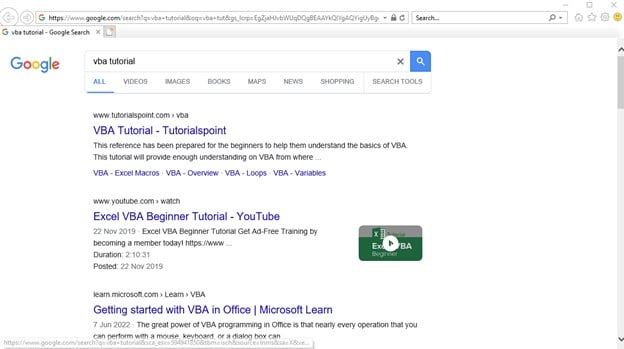

If the text is found, it then prints the result in the Immediate tab.

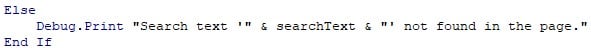

Step 10: In case the word is not found, and the InStr function returns 0, then the Else part of the If-Else condition is executed telling you that the word does not exist by printing it in the Immediate tab.

Step 11: Close the Internet Explorer session after everything is done. After that, you can set the Object to “Nothing” to empty any cache in the object, if any.

Code:

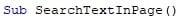

Sub SearchTextInPage()

Dim objIE As Object

Set objIE = CreateObject(“InternetExplorer.Application”)

Dim url As String

url = “https://www.google.com/search?q=vba+tutorial&oq=vba+tut&gs_lcrp=EgZjaHJvbWUqDQgBEAAYkQIYgAQYigUyBggAEEUYOTINCAEQABiRAhiABBiKBTINCAIQABiRAhiABBiKBTIHCAMQABiABDIHCAQQABiABDIHCAUQABiABDIHCAYQABiABDIHCAcQABiABDINCAgQABiRAhiABBiKBdIBCDQyODZqMGoxqAIAsAIA&sourceid=chrome&ie=UTF-8”

Dim searchText As String

searchText = “Tutorial”

objIE.Visible = True

objIE.navigate url

Application.Wait Now + TimeValue(“00:00:02”)

Dim pageSource As String

pageSource = objIE.document.body.outerHTML

If InStr(1, pageSource, searchText, vbTextCompare) > 0 Then

Debug.Print “Search text ‘” & searchText & “‘ found in the page.”

Else

Debug.Print “Search text ‘” & searchText & “‘ not found in the page.”

End If

objIE.Quit

Set objIE = Nothing

End Sub

Step 12: Click “F5” or the “Run” icon to open an Internet Explorer session with the URL you put.

After it closes, the result is printed in the Immediate tab.

Examples

See the different ways you can use VBA to perform Web Scraping below.

Example #1

Given a website, you want to find the total number of backlinks or link references present in it. You can do this by finding the HTML element ‘a’, which is used as a href to refer to another website. You can simply add a counter to it after that.

Step 1: Define a subroutine to find the number of links in a webpage.

Step 2: Create an Internet Explorer session by declaring a VBA Object.

Step 3: Get the URL you want to search for the number of links.

Step 4: Make the viewing of the session “True” and go to the URL specified using the “navigate” function.

Step 5: Wait for the webpage to finish loading and to continue the loop till then.

- objIE.Busy: Checks if Internet Explorer is still busy performing operations. It is True while a webpage is still loading or performing other activities.

- objIE.readyState <> 4: Checks if the readyState property of Internet Explorer is not equal to 4. The readyState property indicates the loading status of the document. A readyState of 4 means the document has completely loaded.

- DoEvents: Allows the operating system to process other events in the queue. It prevents the VBA script from becoming unresponsive during the waiting period.

- Loop: Continues looping until both conditions (objIE.Busy and objIE.readyState <> 4) are false, indicating that Internet Explorer has finished loading the webpage.

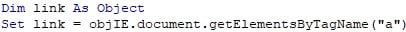

Step 6: Define another object and store the number of elements with the tag ‘a’ in href. It means that if you click on these, you will be redirected to another link.

Step 7: Print the number of links embedded in the webpage in the Immediate tab.

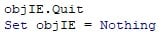

Step 8: Close the window and set the Object as “Nothing”.

Code:

Sub NumberOfLinks()

Dim objIE As Object

Dim link As Object

Set objIE = CreateObject(“InternetExplorer.Application”)

url = “https://www.excelmojo.com/vba/”

objIE.Visible = True

objIE.navigate url

Do While objIE.Busy Or objIE.readyState <> 4

DoEvents

Loop

Set link = objIE.document.getElementsByTagName(“a”)

Debug.Print “Number of links: ” & link.Length

objIE.Quit

Set objIE = Nothing

End Sub

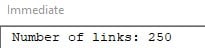

Step 9: Press “F5” to run the subroutine. The output is displayed as shown below:

Example #2

In this example, you will navigate through multiple sites through Web Automation and then close the application.

Step 1: Create a subroutine to go through multiple websites automatically.

Step 2: Create a new Internet Explorer session with a VBA Object.

Step 3: Define the first URL. You can go through the websites in any order.

Step 4: Define the second URL.

Step 5: Make the Internet Explorer session visible by setting it as “True”. Then navigate to the URL of your choice.

Step 6: Keep looping until the webpage is fully loaded, and then wait for 2 seconds on the website.

Here, the Busy and readyState values are used to check if the webpage is fully loaded or not. It is looped so that VBA does not go inactive.

Step 7: Then navigate to the second URL.

Step 8: Similarly, wait until the webpage has loaded and wait for 2 seconds.

The Busy component is used to check if the webpage is still loading.

readyState is used to check if the value 4 has been reached or not. If it isn’t, that means that the website is still loading. It means that despite any errors further in the subroutine, the Internet Explorer instance will still be running.

Step 9: Close the Internet Explorer session and set the Object in VBA to “Nothing” to clear it.

Code:

Sub WebScrapingExample()

Dim objIE As Object

Set objIE = CreateObject(“InternetExplorer.Application”)

Dim url As String

url = “https://www.google.com/”

Dim url1 As String

url1 = “https://www.github.com/”

objIE.Visible = True

objIE.navigate url1

Do While objIE.Busy Or objIE.readyState <> 4

DoEvents

Loop

Application.Wait Now + TimeValue(“00:00:02”)

objIE.navigate url

Do While objIE.Busy Or objIE.readyState <> 4

DoEvents

Loop

Application.Wait Now + TimeValue(“00:00:02”)

objIE.Quit

Set objIE = Nothing

End Sub

Step 10: Click the green arrow button to start the VBA web scraping session. First, the GitHub page will be opened. Then, the Google page will be opened. After 2 seconds, the Window will close.

Example #3

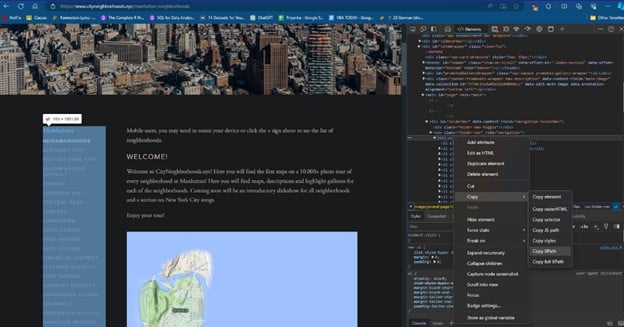

In this example, you will go through a website to print all the neighborhoods of Manhattan available on this website. With the correct HTML tag selection, copy the XPATH by pressing “Ctrl+Shift+I” to start the Inspect element. Then, copy all the content in the tags and print them in an Excel sheet using VBA.

Step 1: Create a sub-procedure to print the neighborhoods in Manhattan, NYC.

Step 2: Like in the previous examples, create a new Internet Explorer session with a new Object.

Step 3: Get the link to get all the names of the neighborhoods in Manhattan.

Step 4: Show the Internet Explorer session by setting “Visible” as “True.” Then, take the browser to the URL using the “navigate” function.

Step 5: Wait for the page to load by freezing the application for 5 seconds with the Application.Wait function.

Step 6: Get the HTML tag containing the locations of Manhattan and store them in an Object.

You can get the result element by opening “Inspect Element” and copying the XPath version of the table of contents.

If you copy it, you get the XPath value as ‘//*[@id=”folderNav”]/nav/ul’.

Using the “querySelector” component, write the id as #folderNav, then the content after the slashes ‘/’ with ‘>.’

querySelector(“#folderNav > nav > ul”)

Step 7: Define string and variant variables.

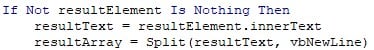

Step 8: Check if the result element is not empty using an If-condition.

Get the text from the XPath element and store it in a String variable. Store this text in the Variant variable where a line with the constant, vbNewLine, separates each element.

Step 9: Save the elements and dimensions of the matrix from the first row to the highest value in the result array. It is only if the array isn’t empty.

Step 10: Add a new worksheet to add the list of neighborhoods in Manhattan.

Step 11: Initialize an iterative variable and start a for-loop from the Lower Bound to the Upper Bound of the array.

LBound returns the first value of the array.

UBound returns the last value of the array.

It is beneficial in cases where you don’t know the size of your VBA array.

Step 12: Paste the elements in the array into the cells of the worksheet you’ve newly created. Then, continue the FOR-loop till all the elements are done.

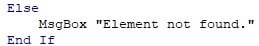

Step 13: Declare an Else condition in case there are no elements found in the webpage to prevent errors. Then, close the Internet Explorer session and set the Object to “Nothing” to clear its cache.

Code:

Sub ScrapeNYCNeighborhoods()

Dim objIE As Object

Set objIE = CreateObject(“InternetExplorer.Application”)

Dim url As String

url = “https://www.cityneighborhoods.nyc/manhattan-neighborhoods”

objIE.Visible = True

objIE.navigate url

Application.Wait Now + TimeValue(“00:00:05”)

Dim resultElement As Object

Set resultElement = objIE.document.querySelector(“#folderNav > nav > ul”)

Dim resultText As String

Dim resultArray As Variant

If Not resultElement Is Nothing Then

resultText = resultElement.innerText

resultArray = Split(resultText, vbNewLine)

If UBound(resultArray) > 0 Then

ReDim Preserve resultArray(1 To UBound(resultArray))

End If

‘ Print the list into one column in Excel

Dim ws As Worksheet

Set ws = ThisWorkbook.Sheets.Add

Dim i As Integer

For i = LBound(resultArray) To UBound(resultArray)

ws.Cells(i, 1).value = resultArray(i)

Next i

Else

MsgBox “Element not found.”

End If

objIE.Quit

Set objIE = Nothing

End Sub

Step 14: Run the above subroutine to print the neighborhoods in Manhattan, NYC into your Excel sheet.

Go to the Excel sheet newly created. All the locations in Manhattan are printed successfully.

Important Things To Note

- Check the website’s terms of service and robots.txt file for scraping permissions.

- Avoid sending too many requests in a short period to prevent IP blocking. This is common while performing VBA Web Scraping Microsoft Edge.

- Avoid circumventing login or access controls without proper authorization.

- Follow website rules and terms; do not proceed if scraping is explicitly prohibited.

Frequently Asked Questions (FAQs)

You can scrape websites using VBA for publicly accessible data, respecting the site’s terms of service. Avoid scraping sensitive or private information, and be aware that some websites may have measures to prevent scraping.

● Use Explicit Waits: Implement waiting mechanisms like Application.Wait to ensure elements load before interacting.

● Leverage ReadyState: Check the ReadyState property to confirm the page has fully loaded.

● Inspect AJAX Calls: Monitor network requests and response patterns for dynamic content changes.

● Utilize Selenium: If VBA alone isn’t sufficient, consider integrating Selenium for more advanced interactions. It is not possible to do VBA web scraping Chrome without selenium when you are searching for Element ID’s in XPATH.

● Adjust Timing: Experiment with different delay durations to synchronize with dynamic updates.

● Use SendKeys or getElementById to input credentials directly for the VBA web scraping click button. Simply get the element’s HTML ID by class or by its XPATH to click on buttons.

● Embed the WebBrowser control in a UserForm for interactive login.

● If supported, include credentials in the URL (not recommended for security reasons).

● If available, use an API for authenticated data retrieval.

● For complex authentication flows, consider integrating Selenium with VBA.

VBA itself has limited support for handling AJAX requests directly. However, you can handle AJAX requests in VBA web scraping by:

● Using ReadyState Property

● Leveraging Wait Functions: Use Application.Wait or custom wait functions to pause execution until the AJAX request finishes.

● Inspecting Network Traffic: Analyze the network traffic using browser developer tools to understand AJAX requests and their responses.

Recommended Articles

This has been a guide to VBA Web Scraping. Here we learn How to Scrape Website Data using VBA along with examples. You can learn more from the following articles –

Leave a Reply